Introducing KubeAI: Open AI on Kubernetes

We are excited to announce the launch of KubeAI, an open-source project designed to deliver the building blocks that enable companies to integrate AI within their private environments. KubeAI is intended to serve as a drop-in alternative to proprietary platforms. With KubeAI you can regain control over your data while taking advantage of the rapidly accelerating pace of innovation in open source models and tools.

Some of the project’s target use cases include:

- Internal Chat UI (PrivateGPT) - Supported

- Secure Code Generation - Supported

- Secure AI Knowledge Base (PrivateRAG) - Coming Soon

- AI integration for industries where data security is a top priority - Reach out!

Initial Launch

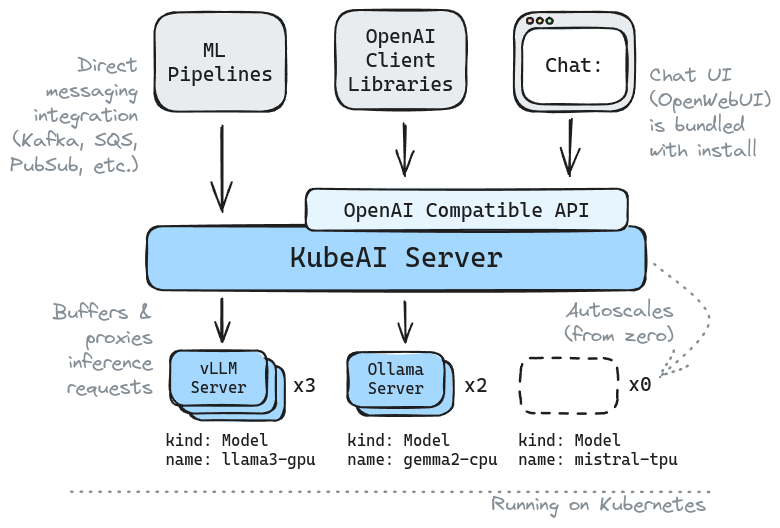

For this initial launch, the project focuses on making inference simple and performant by operationalizing vLLM and Ollama on Kubernetes. When you install KubeAI, you can select the models you want to serve from a catalog that includes preconfigured settings, and run them on GPUs or CPUs.
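To give a feel for what calling a served model could look like, here is a minimal sketch. It assumes the deployment exposes an OpenAI-style chat completions endpoint (vLLM and Ollama can both serve one). The base URL, the model name, and the `build_chat_request` helper are placeholders made up for illustration, so check your own installation for the real values.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request.

    base_url and model are placeholders; they are assumptions, not values
    taken from the KubeAI documentation.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical in-cluster address and model name, for illustration only.
request = build_chat_request(
    base_url="http://kubeai.example.internal/v1",
    model="example-model",
    prompt="Summarize our on-call runbook in three bullet points.",
)
```

Passing `request` to `urllib.request.urlopen` would send it. Because the request shape follows the widely used chat completions format, existing client code may only need its base URL changed.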

Design Philosophy

With simplicity as our top guiding principle, we decided to avoid depending on projects like Istio, Knative, or custom Kubernetes metrics adapters. Instead, KubeAI has built-in support for features like scale-from-zero and application-metric-based autoscaling.
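To make the idea of application-metric-based autoscaling with scale-from-zero concrete, here is a small sketch of the kind of calculation such an autoscaler might perform. It is an illustration only, not KubeAI's actual implementation: the `ScalingPolicy` fields, the `desired_replicas` function, and the choice of in-flight requests as the metric are all assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    # Hypothetical settings, named for illustration only.
    target_requests_per_replica: int = 10
    min_replicas: int = 0  # 0 allows the model to scale down to zero
    max_replicas: int = 5


def desired_replicas(in_flight_requests: int, policy: ScalingPolicy) -> int:
    """Return how many replicas the current request load calls for."""
    if in_flight_requests <= 0:
        # No traffic: fall back to the configured floor (possibly zero).
        return policy.min_replicas
    needed = math.ceil(in_flight_requests / policy.target_requests_per_replica)
    # Any traffic at all requires at least one replica (scale from zero).
    needed = max(needed, 1, policy.min_replicas)
    return min(needed, policy.max_replicas)


policy = ScalingPolicy()
idle = desired_replicas(0, policy)        # no requests, so zero replicas
first = desired_replicas(1, policy)       # first request wakes one replica
busy = desired_replicas(25, policy)       # ceil(25 / 10) = 3 replicas
capped = desired_replicas(1000, policy)   # clamped to max_replicas
```

The point of the sketch is that the scaling signal comes from the application itself (requests waiting on a model) rather than from CPU or memory usage, which is what makes waking a model from zero replicas possible without a separate metrics adapter.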

Up Next

We are looking to quickly expand the scope of the project to include Parameter-Efficient Fine-Tuning (PEFT). The goal is to support the full lifecycle of LoRA adapters, from managing LoRA jobs through to hot-loading adapters into model servers at inference time.

We would love to hear what you would like to see built! Shoot on over to the GitHub repo and weigh in on what matters to you. Don't forget to drop us a star!